Kolena is a comprehensive machine learning testing and debugging platform that surfaces hidden model behaviors and takes the mystery out of model development. Kolena helps you:

- Perform high-resolution model evaluation

- Understand and track behavioral improvements and regressions

- Meaningfully communicate model capabilities

- Automate model testing and deployment workflows

Kolena organizes your test data, stores and visualizes your model evaluations, and provides tooling to craft better tests. You interface with it through the web at app.kolena.com and programmatically via the `kolena` Python client.

Why Kolena?

TL;DR

Kolena helps you test your ML models more effectively.

Jump right in with the

Current ML evaluation techniques are falling short. Engineers run inference on arbitrarily split benchmark datasets, spend weeks staring at error graphs to evaluate their models, and ultimately produce a global metric that fails to capture the true behavior of the model.

Models exhibit highly variable performance across different subsets of a domain. A global metric gives you a high-level picture of performance but doesn't tell you what you really want to know: what sort of behavior can I expect from my model in production?

To answer this question you need a higher-resolution picture of model performance. Not "how well does my model perform on class X," but "in what scenarios does my model perform well for class X?"

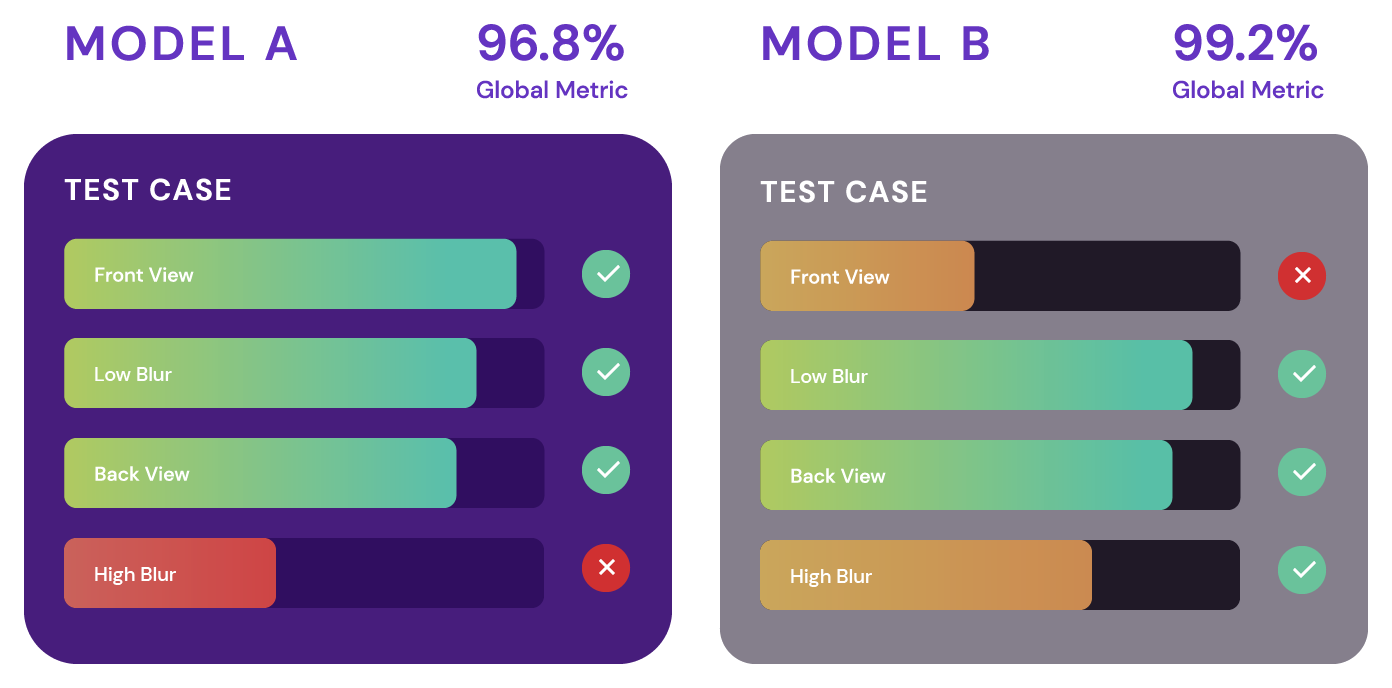

In the above example, if we looked only at the global metric (e.g., F1 score), we would almost certainly choose to deploy Model B.

But what if the "High Blur" scenario isn't important for our product? Most of Model A's failures come from that scenario, and it outperforms Model B in more important scenarios like "Front View." Meanwhile, Model B's underperformance in "Front View," a highly important scenario, is masked by its improved performance in the unimportant "High Blur" scenario.
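The Model A vs. Model B comparison can be reproduced in miniature. The sketch below uses plain Python and synthetic toy records (they are not the data behind the figure above); the scenario names mirror the example, and the helpers `f1_score`, `evaluate`, and `make_records` are hypothetical illustrations, not part of the `kolena` client. It computes one F1 score over all samples, then the same metric within each scenario, to show how a higher global score can hide a regression in an important subset.

```python
from collections import defaultdict


def f1_score(y_true, y_pred):
    """Binary F1 = 2TP / (2TP + FP + FN); returns 0.0 if there are no TP, FP, or FN."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def make_records(scenario, labels, preds_a, preds_b):
    """Hypothetical helper: one record per sample, tagged with its scenario."""
    return [
        {"scenario": scenario, "label": t, "model_a": a, "model_b": b}
        for t, a, b in zip(labels, preds_a, preds_b)
    ]


def evaluate(records, model):
    """Return (global F1, {scenario: F1}) for one model's predictions."""
    by_scenario = defaultdict(lambda: ([], []))
    all_true, all_pred = [], []
    for rec in records:
        t, p = rec["label"], rec[model]
        by_scenario[rec["scenario"]][0].append(t)
        by_scenario[rec["scenario"]][1].append(p)
        all_true.append(t)
        all_pred.append(p)
    per_scenario = {s: f1_score(t, p) for s, (t, p) in by_scenario.items()}
    return f1_score(all_true, all_pred), per_scenario


# Synthetic toy data (label 1 = positive), chosen only to illustrate the effect.
records = make_records(
    "Front View",
    [1, 1, 1, 1, 1, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1, 0, 0, 0, 0, 0],  # Model A: no errors
    [1, 1, 1, 0, 0, 0, 0, 0, 1, 1],  # Model B: 2 misses, 2 false alarms
) + make_records(
    "High Blur",
    [1] * 10 + [0] * 10,
    [1, 1] + [0] * 8 + [1] * 4 + [0] * 6,  # Model A: 8 misses, 4 false alarms
    [1] * 10 + [0] * 10,                   # Model B: no errors
)

for model in ("model_a", "model_b"):
    overall, per_scenario = evaluate(records, model)
    rounded = {s: round(v, 3) for s, v in per_scenario.items()}
    print(f"{model}: global F1={overall:.3f} {rounded}")
# model_a: global F1=0.538 {'Front View': 1.0, 'High Blur': 0.25}
# model_b: global F1=0.867 {'Front View': 0.6, 'High Blur': 1.0}
```

On this toy data the global F1 favors Model B, while the per-scenario breakdown shows Model A is better on "Front View"; which model to ship depends on which scenarios matter for the product.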

Test data is more important than training data!

Everything you know about a new model's behavior is learned from your tests.

Fine-grained tests teach you what you need to learn before a model hits production.

Now... why Kolena? Two reasons:

- Managing fine-grained tests is a tedious data engineering task, especially as your dataset grows and your understanding of your domain develops

- Creating fine-grained tests is labor-intensive and typically involves manual annotation of countless images, a costly and time-consuming process

We built Kolena to solve these two problems.

Read More

- Best Practices for ML Model Testing (Kolena Blog)

- Hidden Stratification Causes Clinically Meaningful Failures in Machine Learning for Medical Imaging (arXiv:1909.12475)

- No Subclass Left Behind: Fine-Grained Robustness in Coarse-Grained Classification Problems (arXiv:2011.12945)

Developer Guide

Learn how to use Kolena to test your models effectively:

- Run through an example using Kolena to set up rigorous and repeatable model testing in minutes.

- Install and initialize the `kolena` Python package, the programmatic interface to Kolena.

- Core concepts for testing in Kolena.

- Developer-focused, detailed API reference documentation for `kolena`.